I was so excited to get access to Search Labs today and start playing around with Google’s Search Generative Experience (SGE). The SGE, which was demoed at Google I/O on May 10th, is Google’s response to Bing Chat. Search Labs is rolling out access to people who joined the waitlist, starting today.

The I/O demo didn’t include very many responses, which is understandable given how short it was. Still, that demo had a lot of search engine optimization professionals (SEOs) reeling. So far, I’ve felt pretty good about what I’m seeing in SGE from an SEO and consumer standpoint, so I want to try to put some of those initial concerns to rest.

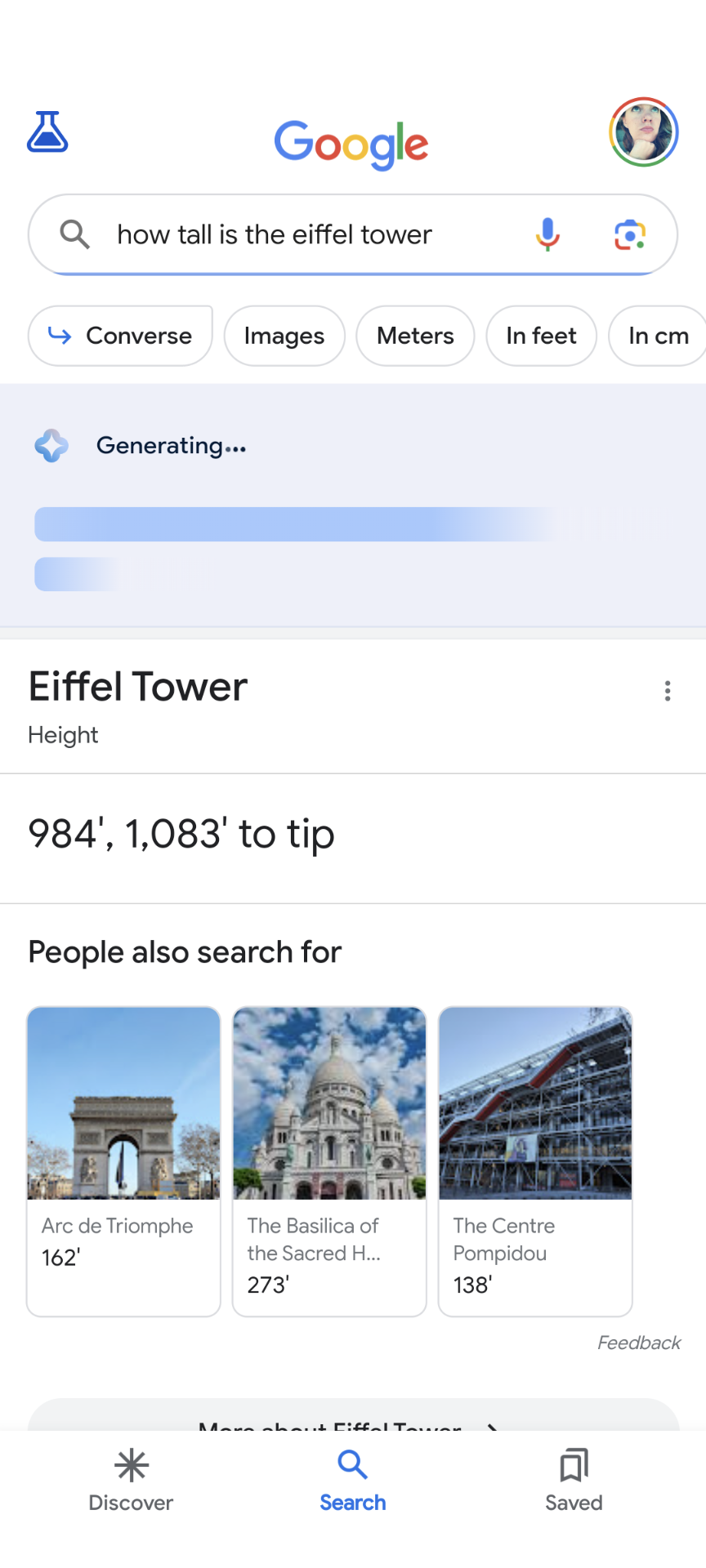

Concern 1: This will only make zero-click searches even worse

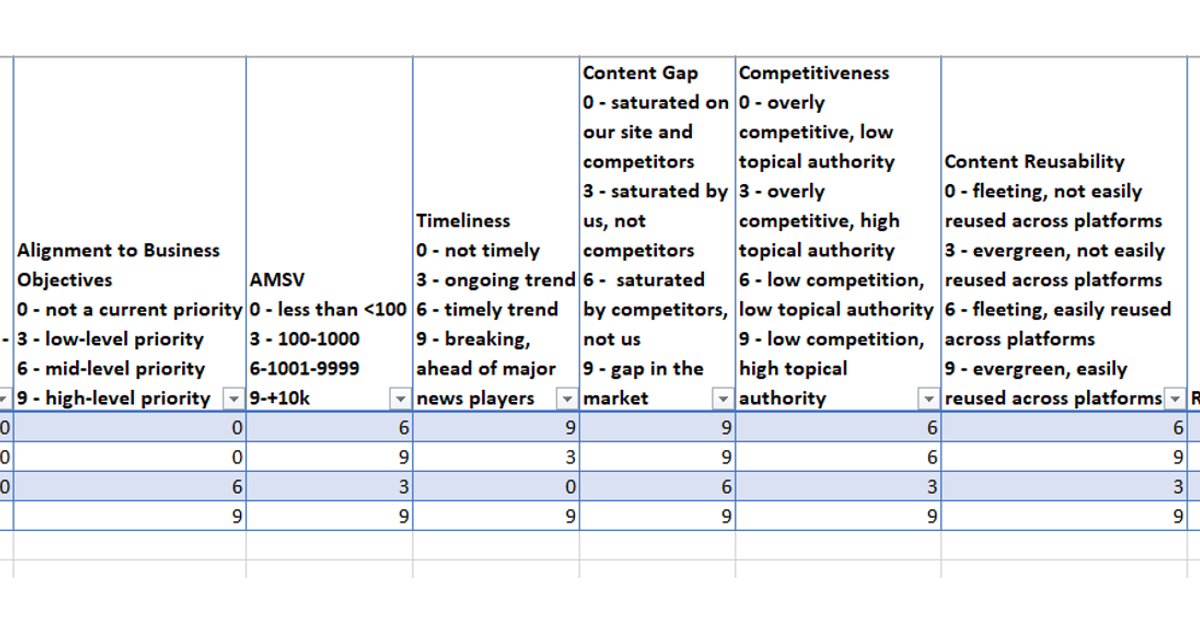

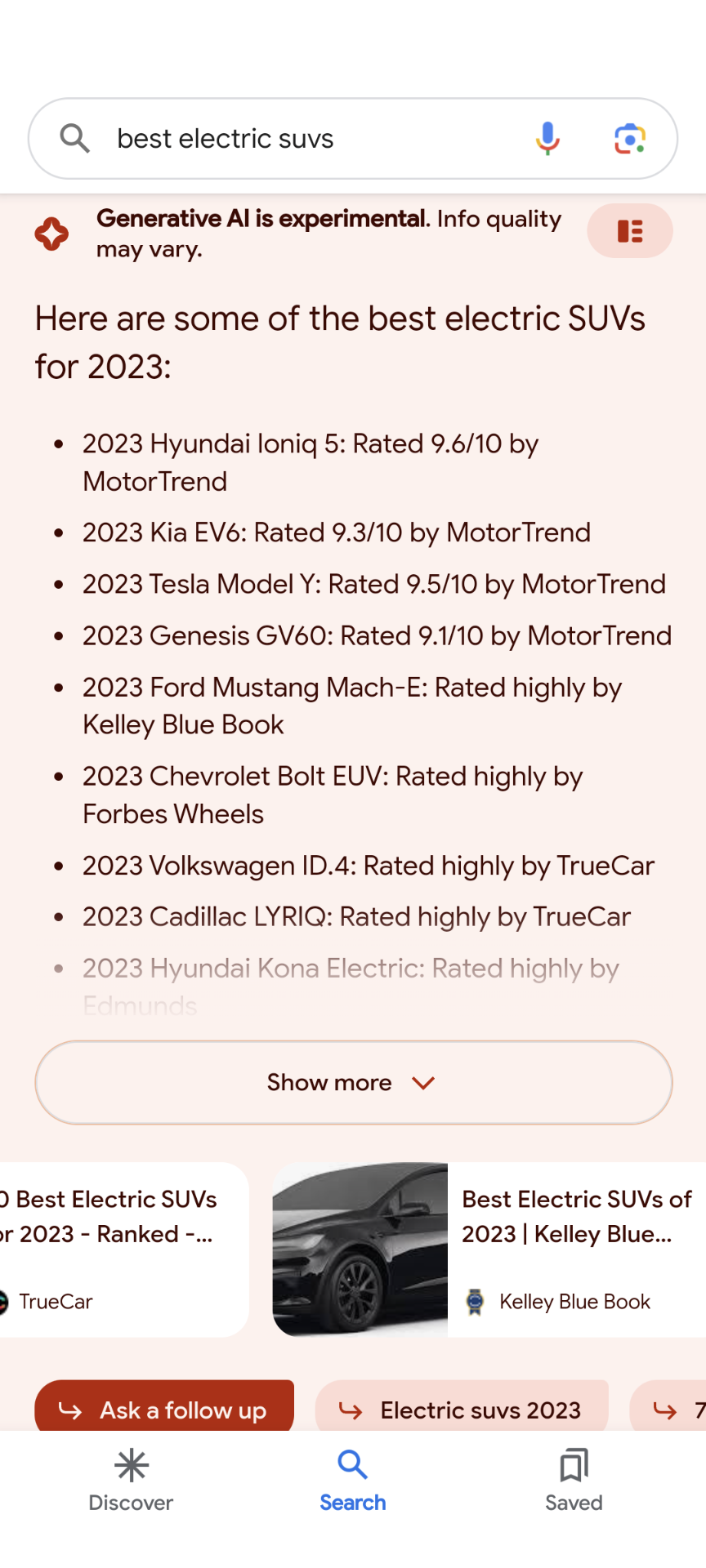

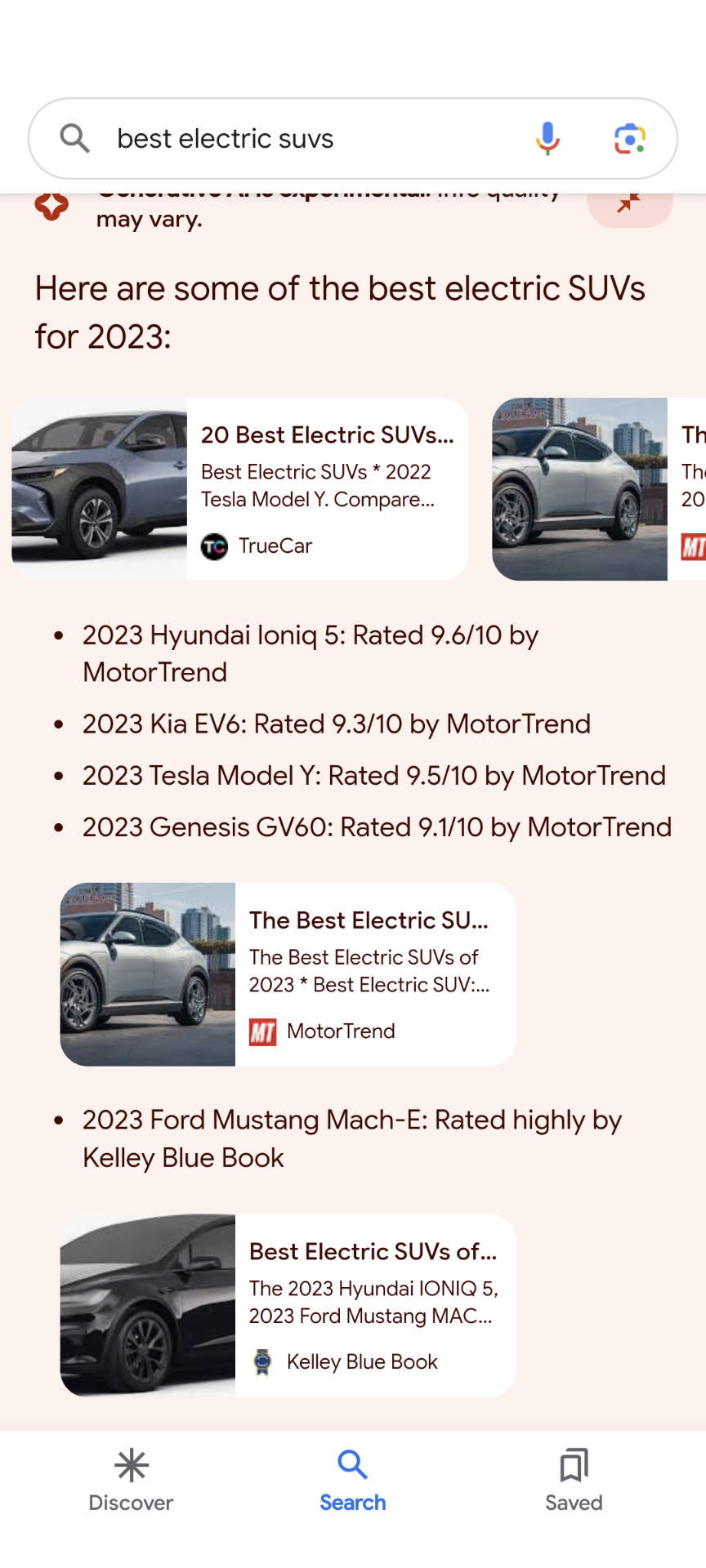

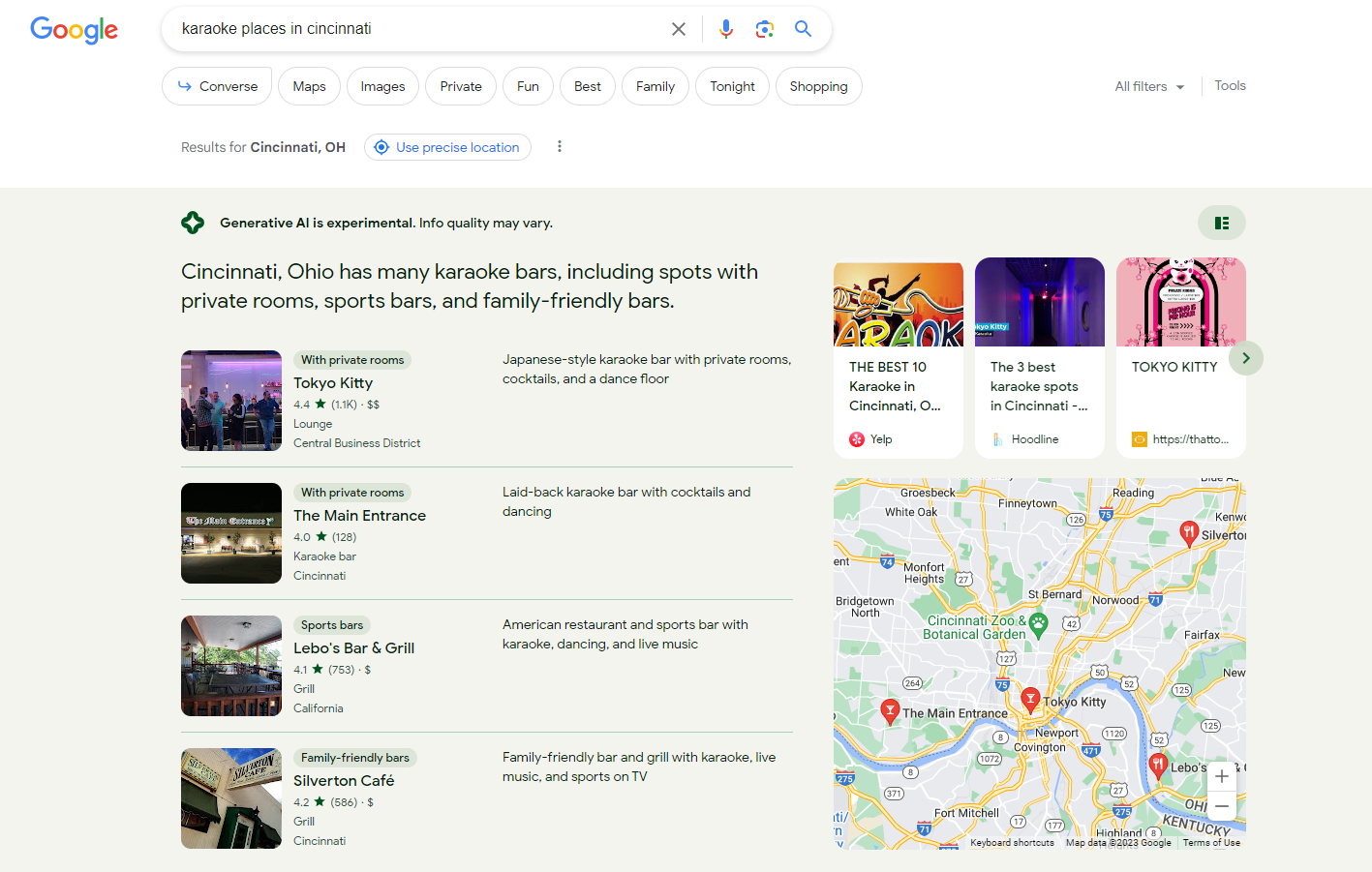

When Google’s chatbot Bard rolled out, SEOs were concerned about the lack of citations, especially since Bing Chat included links to sources. You can read more about that in a post I did for my master’s program’s blog. So when article thumbnails were shown in the AI response during the I/O demo, many were happy.

I’m here to tell you that I’m even happier with what I’m seeing in the SGE myself! In some cases, I’ve seen carousels of 8 articles shown. Will users scroll through all of those? Maybe not, but it does give more opportunities to be seen “above the fold,” especially on mobile.

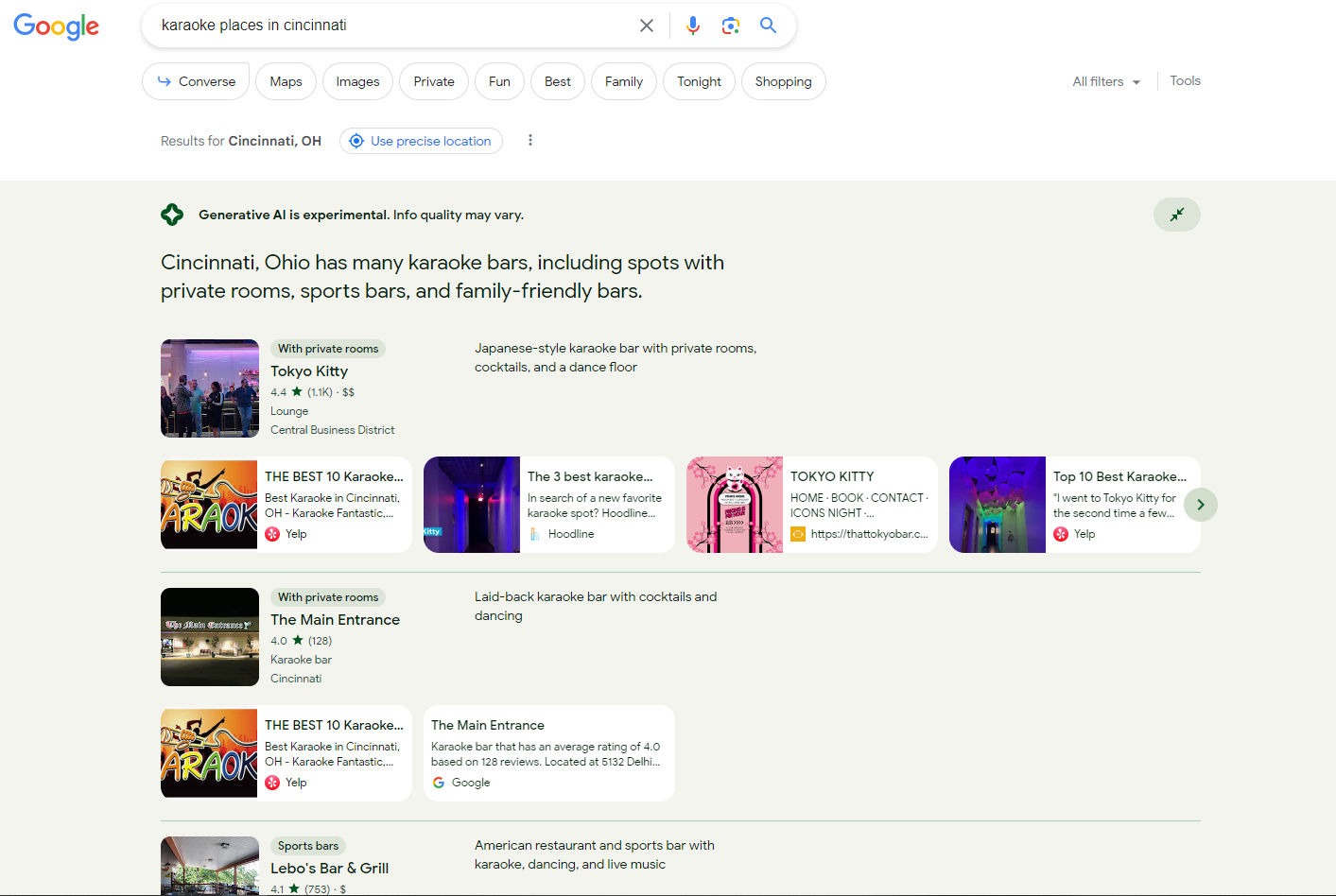

Even better, the SGE offers different ways to view the response. The default view uses the carousels, but there is also what I’m calling a “grid” view, named for the icon used to toggle it (in the top right of the AI response), and it is way better! I like it as a consumer because it gives a better idea of which resources were used for which pieces of information in the SGE response. Plus, it pulls your potential link out of the carousel for potentially more visibility.

I think this “grid” view is even better with local results because it also shows articles that mention the local business, under whatever category Google has assigned to it.

If we get Google Search Console data on this, the carousel vs. non-carousel views will completely mess up impressions on the same query. Links hidden in a carousel are not counted as an impression unless they are shown, so if the carousel is dropped, an impression would be counted. Could a toggle create duplicate impressions like it does in the Job/Event lists?

So how frequently does the SGE appear?

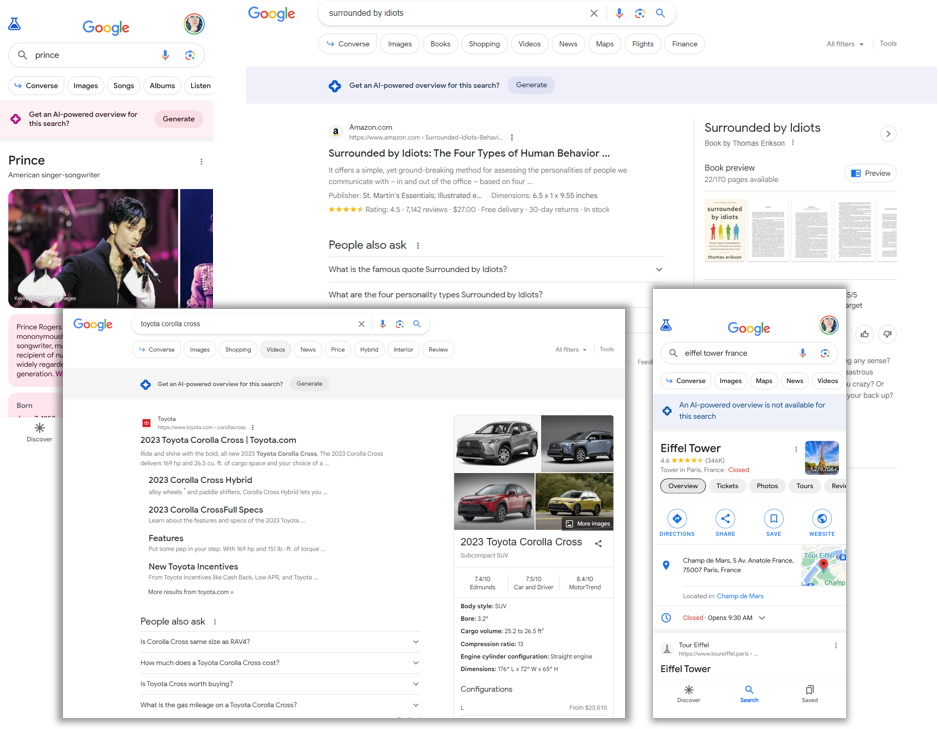

As with anything in SEO, it depends. Device type seems to come into play, and of course intent/query does as well.

I rapid-fired queries beyond what is mentioned or pictured in this post, testing 22 queries on both desktop and mobile. Desktop didn’t show SGE at times when mobile did.

An AI response wasn’t available on either desktop or mobile for “secure a car loan.” This doesn’t come as a surprise, as Google said it would treat “Your Money or Your Life” (YMYL) topics very carefully. However, for “is keto healthy” a response was given. On mobile there was a clear disclaimer at the top indicating this is best discussed with a doctor, but the disclaimer was missing on desktop.

Two other queries had mixed results between mobile and desktop. “Toyota Corolla configurations” on desktop did not offer an SGE at all, but it auto-populated on mobile. “Current deals on Toyota Corolla” did not auto-generate on desktop but did on mobile. I’ll share more about a pattern for when an SGE is not auto-populated under the next concern.

Concern 2: Featured snippets or the Knowledge Panel will go away

Featured snippets still exist! And when it takes a while for an AI response to show up, they are shown pretty prominently.

It does seem like Google is not automatically triggering the AI response when a Knowledge Panel (KP) is available. Below are several different KPs where the SGE is not automatically triggered. I tried many national sports teams from different sports, and those results didn’t even offer an SGE option.

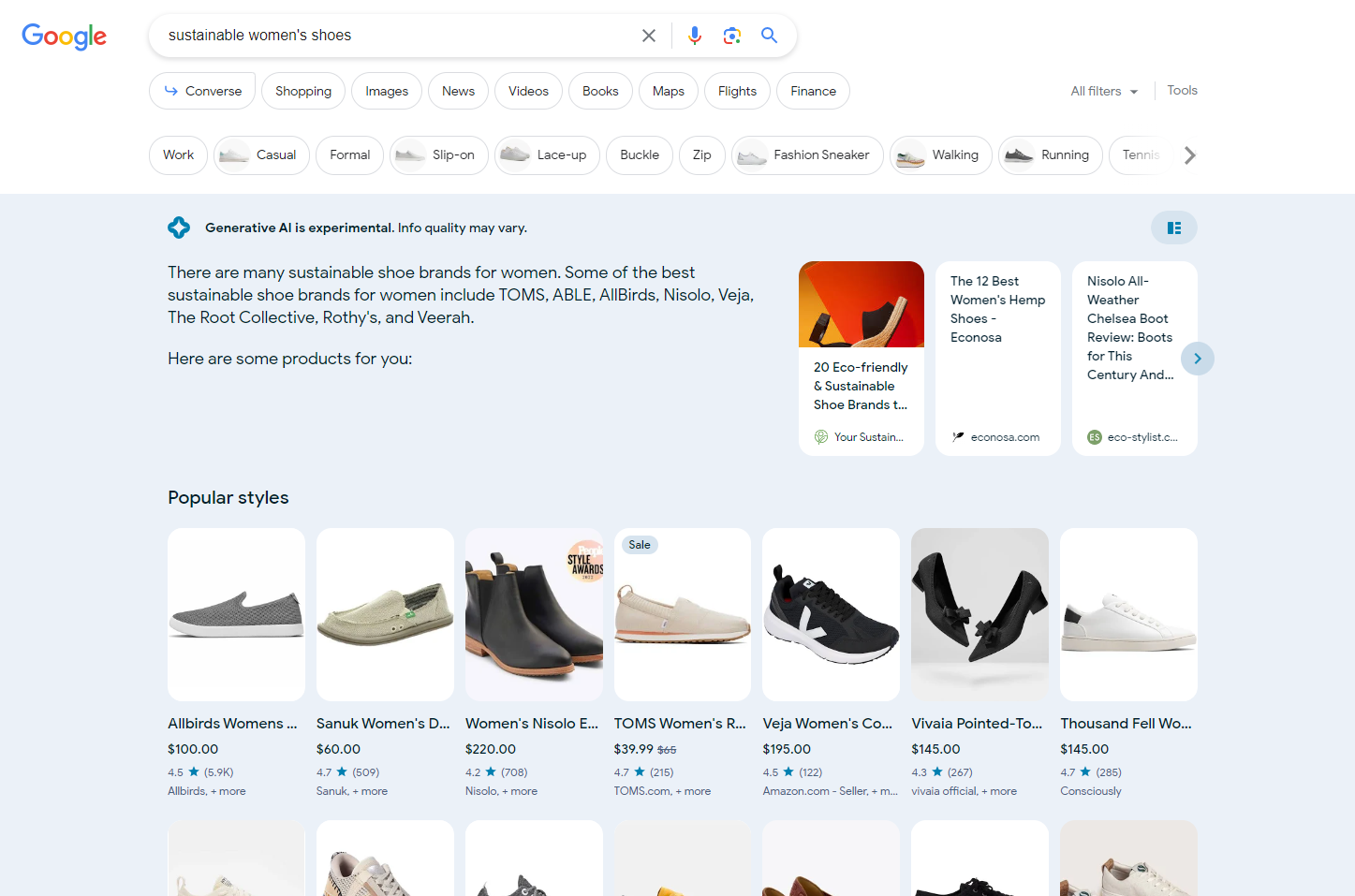

Concern 3: Publishers are doomed on transactional terms

Yes, as shown in the I/O demo, products make up the bulk of shopping AI responses. But I’m also seeing a carousel of articles, like the Interesting Finds that used to appear on shopping terms.

However, I’m seeing some of these generate empty citations too. Possibly this means there is an opportunity for a publisher to create content that could be featured here, or it means the experience is still falling short at generating proper sources.

Concern 4: Ads will take all of the clicks

During the I/O demo, it appeared that shopping ads were showing ABOVE the AI response box. Personally, I felt that would make Ads even more sought after, thus more competitive. But I’m seeing them pushed down, as pictured in the two images in the previous concern.

Other Thoughts about SGE

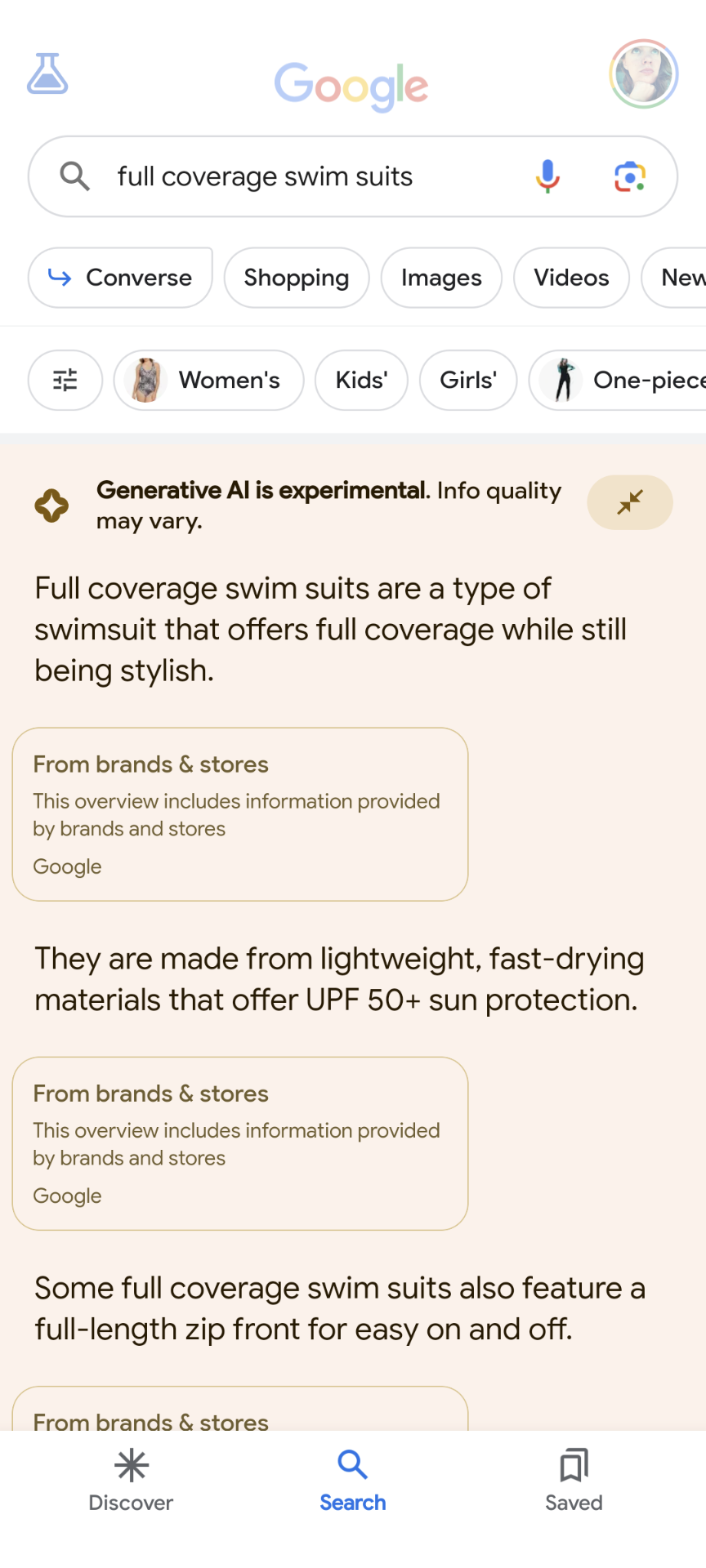

Local Results

On mobile, local results lack a map. I hate this. As a consumer, I like seeing the landmarks and cross streets to get a better sense of where something is in relation to other things I’m aware of. I first started testing on mobile, so I was happy to see the map does show up on desktop. However, when the grid view is toggled, the map disappears (as shown above).

Lists Within Responses

Sometimes the AI response makes these nice lists, like the ones in the local results. Those results link over to the local knowledge panel for that business. However, other lists seem like they should be clickable and are not. In the following image, it was disappointing that the list items did not link over to the websites. Instead, the images opened the image search result, and many images were not even from the site mentioned.

Mixed Intent

Google has always struggled with mixed intent, and SGE makes it even worse. General queries, like “Ford,” generated a local SGE for Ford dealers. Basically, Google jumped from a likely navigational intent to a transactional one. It didn’t seem fitting.

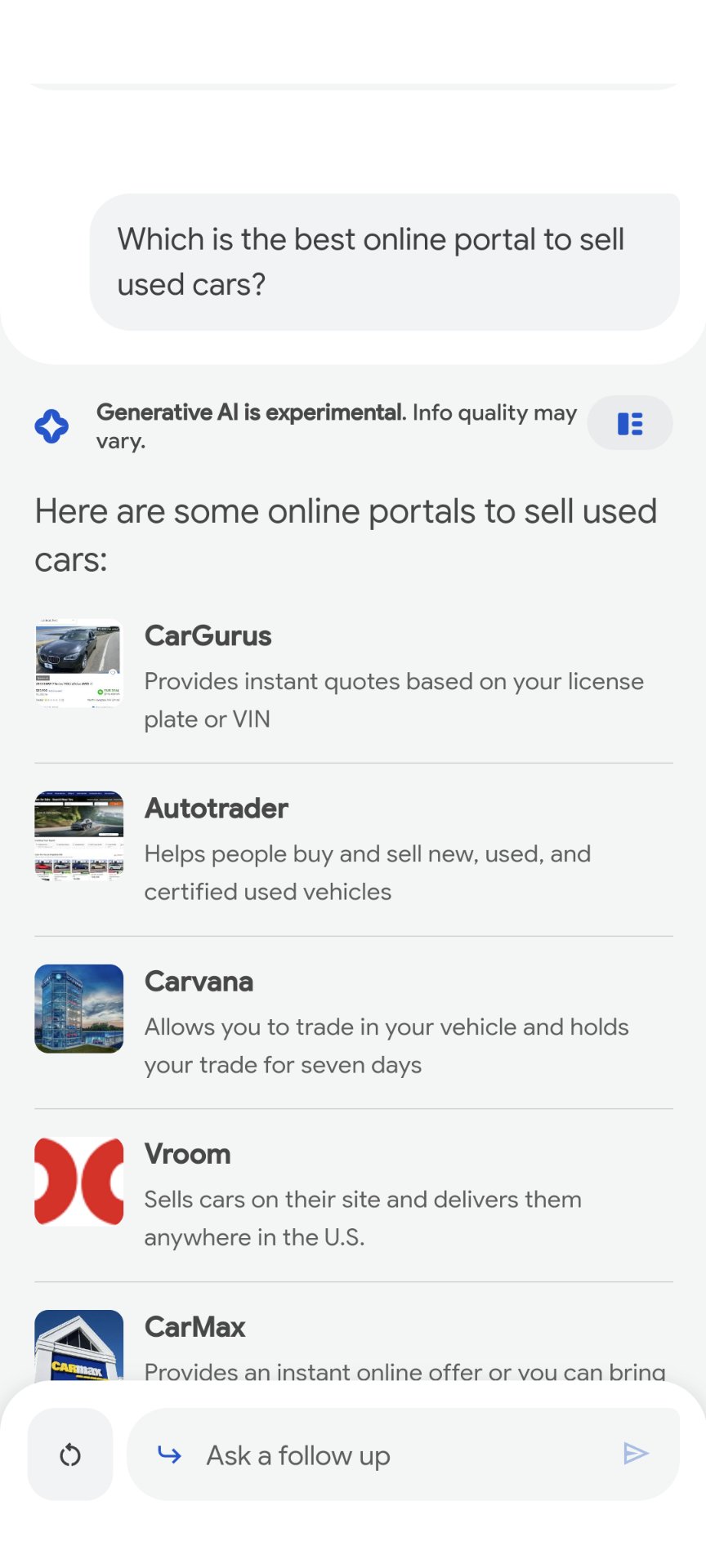

Conversation Mode

I was quite pleased to see that conversation mode kept a similar feel to the SGE. After the initial prompt, results with links to sources were generated. If you keep scrolling, a few organic results show underneath, followed by a prompt to see more search results.

Colors

Don’t be a fool and spend time pondering over the meaning behind the colors of the response box. After a long while, I realized they are based on the colors visible in the normal search results, like an image or logo.

Caveats to accessing SGE

I’m not able to get SGE to show up in search in Chrome on mobile. I have to use the Google app on mobile. That also means you can’t use the mobile emulator on desktop to get the experience (I tested).

As with the evolution from 10 blue links to the current rich-results-heavy SERPs, Google will continuously adjust the SGE. What we are seeing now will likely not be exactly how it launches. But what I’ve seen has felt less “doom and gloom” than some may have expected. Plus, I see any change like this as an opportunity to keep learning and growing!